An augmented reality assessment designed to test astronaut adjustment to gravity changes

It’s not safe to assume astronauts are ready for mission tasks following spaceflights.


When shifting from the microgravity of a spacecraft to the gravity of the Moon or Mars, astronauts experience deficits in perceptual and motor function. The vestibular system in the inner ear, which detects the position and movement of the head, must adjust to reinterpret the new gravity cues.

A University of Michigan-led team, including researchers from the University of Colorado Boulder’s Bioastronautics Lab and NASA’s Neuroscience Lab at Johnson Space Center, developed a multidirectional tapping task administered in augmented reality (AR) to detect sensorimotor impairments similar to those observed in astronauts after spaceflight.

The results, published in Aerospace Medicine and Human Performance, could support mission operations decisions by establishing when astronauts are able to perform tasks that require full coordination, like piloting vehicles or operating other complex systems.

Field tests to assess sensorimotor impairment have previously been conducted when International Space Station crew members returned to Earth. Most crew members fully regained the ability to perform vestibular coordination tests within two to four days after landing. However, they received intensive treatment from strength, conditioning and rehabilitation specialists during their recovery.

When making gravity transitions to destinations beyond Earth, astronauts will need a method to test recovery within the limited space of their spacecraft without the assistance of experts.

“Space is really a type of telehealth where we need to make decisions without the experts present. Tools to support that decision-making can make future space missions more efficient and help decrease risks,” said Leia Stirling, co-author on the paper and an associate professor of industrial and operations engineering and robotics at the University of Michigan.

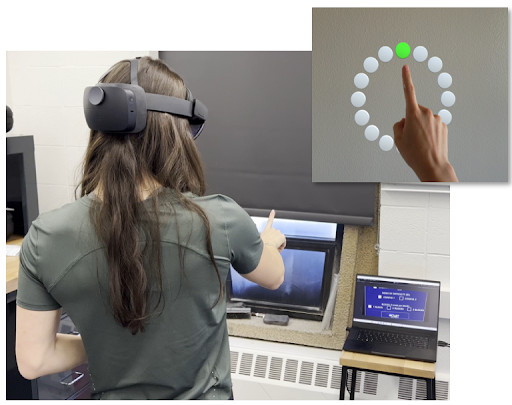

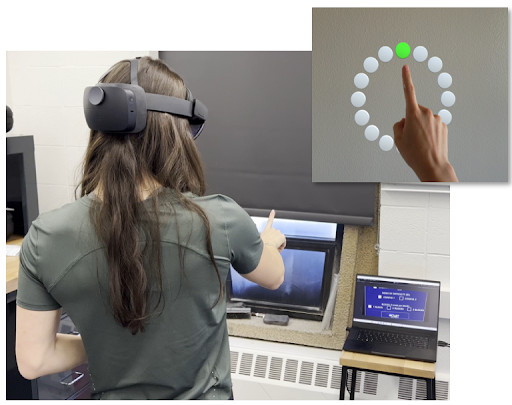

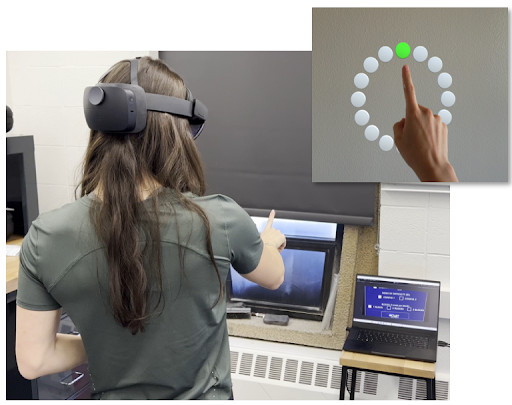

The research team developed a hand-eye coordination task, viewed through AR glasses, as a lightweight, compact solution. This format enables hand and eye tracking while allowing users to view their physical surroundings along with computer-generated perceptual information.

AR facilitates the development of tailored assessments, adapting functional tasks to meet mission requirements or individual crew needs. Leveraging embedded sensors, these AR-based evaluations track and analyze astronauts’ hand-eye coordination, head kinematics, and task-specific performance metrics, offering valuable insights into their sensorimotor capabilities.

“Data from AR-based evaluations enable targeted feedback and the creation of personalized rehabilitation programs or countermeasures,” said Hannah Weiss, co-author on the paper and doctoral graduate from the University of Michigan.

The hand-eye coordination task, adapted from an established human-computer interaction standard, features 16 targets holographically projected into the user’s physical space and arranged in an equidistant circular array. The goal is to tap the targets as quickly and accurately as possible in a predetermined sequence.
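To make the task geometry concrete, here is a minimal Python sketch of a 16-target circular layout and a simple speed and accuracy score. It is illustrative only and is not the study's software: the circle radius, the target hit radius, the planar coordinates, and the alternating "jump across the circle" tapping order are all hypothetical choices, since the article does not specify the actual sequence or dimensions.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Target:
    x: float  # meters, in an assumed plane facing the user
    y: float


def circular_targets(n: int = 16, radius: float = 0.25) -> List[Target]:
    """Place n targets at equal angular spacing on a circle (radius is hypothetical)."""
    return [
        Target(radius * math.cos(2 * math.pi * k / n),
               radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]


def alternating_order(n: int = 16) -> List[int]:
    """Hypothetical predetermined sequence: tap a target, jump to the one
    directly across the circle, then advance one position and repeat."""
    if n % 2 != 0:
        raise ValueError("this sketch assumes an even number of targets")
    half = n // 2
    order: List[int] = []
    for k in range(half):
        order.extend([k, k + half])
    return order


def score(taps: Sequence[Tuple[float, float, float]],
          targets: Sequence[Target],
          order: Sequence[int],
          hit_radius: float = 0.02) -> Tuple[float, float]:
    """Return (hit rate, mean time between taps in seconds).

    taps: one (time_s, x, y) tuple per step in `order`.
    A tap counts as a hit if it lands within hit_radius (assumed) of its target.
    """
    hits = 0
    for (_, x, y), idx in zip(taps, order):
        tgt = targets[idx]
        if math.hypot(x - tgt.x, y - tgt.y) <= hit_radius:
            hits += 1
    times = [t for t, _, _ in taps]
    intervals = [b - a for a, b in zip(times, times[1:])]
    mean_interval = sum(intervals) / len(intervals) if intervals else float("nan")
    return hits / len(taps), mean_interval
```

As a usage example, tapping every target dead center at a steady 0.5 s pace returns a hit rate of 1.0 and a mean interval of 0.5 s. Under this scoring, a decrease in speed after vestibular stimulation would show up as a longer mean interval, and a decrease in accuracy as a lower hit rate.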

To test the impact of vestibular disruption on this task, the researchers applied electrical stimulation to the study participants’ mastoid processes, just behind the ear, to disrupt their sensation of motion. Judging by participants’ postural sway, the resulting vestibular impairment was comparable to the disorientation astronauts would experience one to four hours after flight.

Both the speed and accuracy of tapping targets decreased after vestibular stimulation, indicating that this type of impairment may hinder a crew’s ability to acquire known target locations while standing still. Head linear accelerations also increased, suggesting that participants’ efforts to keep their balance interfered with their performance.
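Head linear acceleration can be derived from the head positions that AR headsets track. The article does not describe the study's processing pipeline, so the following is only a generic Python sketch. It assumes uniformly sampled 3D head positions in meters and estimates acceleration magnitude with central second differences; the function name and sampling setup are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def head_linear_acceleration(positions: Sequence[Vec3], dt: float) -> List[float]:
    """Acceleration magnitude (m/s^2) at each interior sample.

    positions: head positions in meters, assumed sampled every dt seconds.
    Uses the central second difference (p[i+1] - 2*p[i] + p[i-1]) / dt**2
    on each axis, then takes the Euclidean norm.
    Returns len(positions) - 2 values (empty if fewer than 3 samples).
    """
    if len(positions) < 3:
        return []
    magnitudes = []
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        acc = [(n - 2 * c + p) / dt ** 2 for p, c, n in zip(prev, cur, nxt)]
        magnitudes.append(math.sqrt(sum(a * a for a in acc)))
    return magnitudes


def mean(values: Sequence[float]) -> float:
    """Average of a non-empty sequence, e.g. to compare trials before and after stimulation."""
    return sum(values) / len(values)
```

Second differences amplify sensor noise, so in practice raw tracking data is usually low-pass filtered before this step. Comparing the mean magnitude from trials before and after stimulation is one simple way to express the kind of increase described above.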

Future research will explore balance and mobility tasks that complement this hand-eye coordination assessment and provide a clearer picture of an astronaut’s adjustment to local gravity. Before deployment, the team will also need to establish readiness thresholds to guide decisions. Weiss, now a Human Factors Research Engineer at NASA Johnson Space Center, is extending this work to support astronaut testing.

“We will be testing this task in microgravity through a program at Aurelia Aerospace that enables students to perform studies in simulated microgravity using parabolic flight,” said Stirling.

“Sensorimotor challenges pose major risks to crewmembers, and we are working towards using electrical vestibular stimulation to train astronauts to operate in these impaired states prior to spaceflight to improve their outcomes,” said Aaron Allred, first author on the paper and a doctoral student of Bioastronautics at the University of Colorado Boulder.

“Here on Earth, the assessments and impairment paradigms we are developing could inform telehealth patient care, such as for those who experience vestibular loss with age,” added Allred.

Additional co-author: Torin K. Clark of the University of Colorado Boulder.

This work was funded by NASA Contract 80NSSC20K0409 and a NASA Space Technology Graduate Research Opportunities Award.

Full citation: “An augmented reality hand-eye sensorimotor impairment assessment for spaceflight operations,” Aaron R. Allred, Hannah Weiss, Torin K. Clark, and Leia Stirling, Aerospace Medicine and Human Performance (2024). DOI: 10.3357/AMHP.6313.2024

Written by Patricia Delacey, University of Michigan College of Engineering