Explainable AI: New framework increases transparency in decision-making systems

Achieving high interpretability and accuracy at a low computational cost, the technique can improve medical diagnostics with trustworthy AI

Written by: Patricia DeLacey, University of Michigan College of Engineering

A new explainable AI technique transparently classifies images without compromising accuracy. The method, developed at the University of Michigan, opens up AI for situations where understanding why a decision was made is just as important as the decision itself, like medical diagnostics.

If an AI model flags a tumor as malignant without specifying what prompted the result—like size, shape or a shadow in the image—doctors cannot verify the result or explain it to the patient. Worse, the model may have picked up on misleading patterns in the data that humans would recognize as irrelevant.

“We need AI systems we can trust, especially in high-stakes areas like healthcare. If we don’t understand how a model makes decisions, we can’t safely rely on it. I want to help build AI that’s not only accurate, but also transparent and easy to interpret,” said Salar Fattahi, an assistant professor of industrial and operations engineering at U-M and senior author of the study to be presented the afternoon of July 17 at the International Conference on Machine Learning in Vancouver, British Columbia.

When classifying an image, AI models associate vectors of numbers with specific concepts. These number sets, called concept embeddings, can help AI locate things like “fracture,” “arthritis” or “healthy bone” in an X-ray. Explainable AI works to make concept embeddings interpretable—meaning a person can understand what the numbers represent and how they influence the model’s decisions.
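To make the idea concrete, here is a minimal sketch, not taken from the study, of one common way concept embeddings are used in concept-bottleneck-style classifiers. An image embedding is compared with each concept embedding by cosine similarity. A linear layer on those similarity scores then produces a prediction whose per-concept contributions can be read off directly. The three-dimensional vectors, the class weights and the function names (`concept_scores`, `explain`) are all hypothetical; real embeddings from a model such as CLIP have hundreds of dimensions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy concept embeddings (real CLIP embeddings are much larger).
CONCEPTS = {
    "fracture": [0.9, 0.1, 0.0],
    "arthritis": [0.1, 0.9, 0.1],
    "healthy bone": [0.0, 0.2, 0.9],
}

def concept_scores(image_embedding, concepts=CONCEPTS):
    """How strongly each named concept matches an image embedding."""
    return {name: cosine(image_embedding, vec) for name, vec in concepts.items()}

def explain(image_embedding, class_weights):
    """Linear class score over concept scores, plus each concept's contribution.

    `class_weights` maps concept name -> hypothetical weight for one class.
    """
    scores = concept_scores(image_embedding)
    contributions = {name: class_weights[name] * s for name, s in scores.items()}
    return sum(contributions.values()), contributions

# Usage with a made-up image embedding that resembles "fracture".
image = [0.8, 0.2, 0.1]
logit, parts = explain(image, {"fracture": 2.0, "arthritis": 0.5, "healthy bone": -1.0})
top_concept = max(parts, key=parts.get)  # the concept contributing most to the score
```

Because the final score is a sum of named terms, a reader can see which concept drove a decision. That is the kind of transparency the paragraph above describes.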

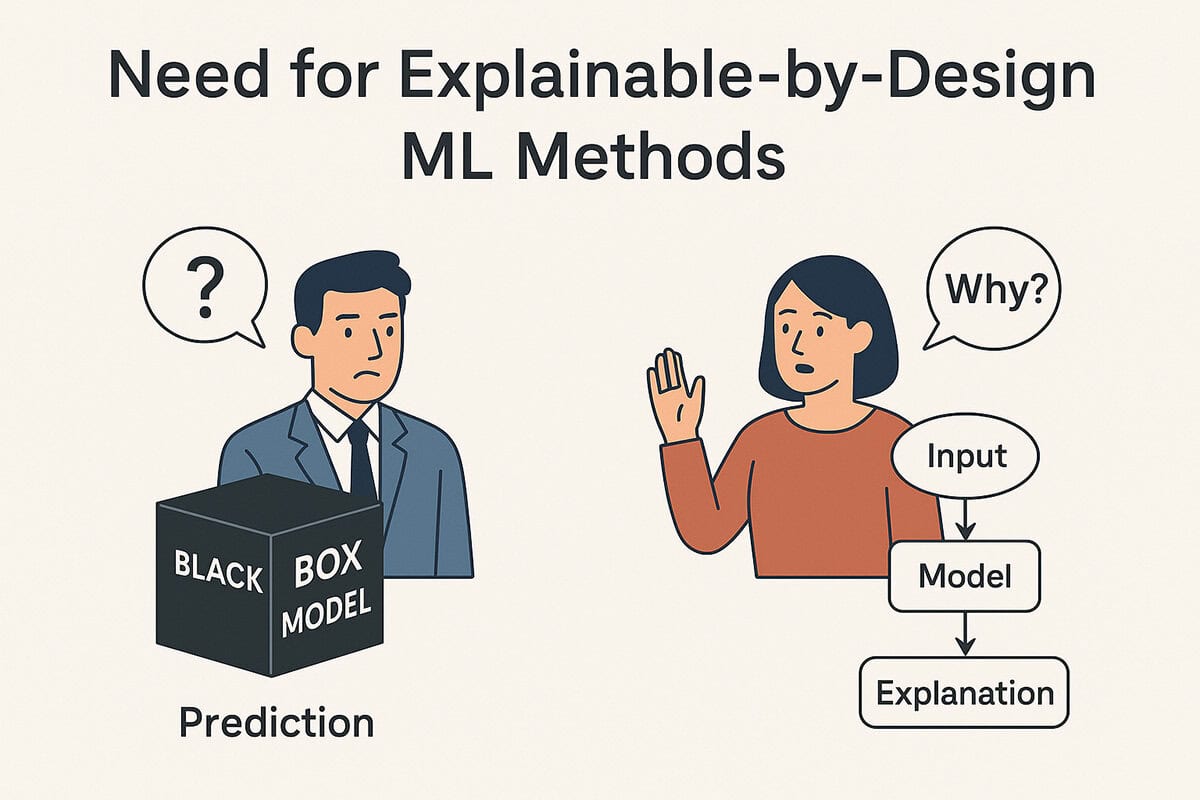

Previous explainable AI methods add interpretability features after the model is already built. While these approaches can identify key factors that influenced model predictions, they are, counterintuitively, not explainable themselves. These methods also treat concept embeddings as fixed numerical vectors, ignoring potential errors or misrepresentations inherent in them. For instance, they may embed the concept of “healthy bone” using a pretrained multimodal model such as CLIP. Unlike carefully curated datasets, CLIP's training data consists of large-scale, noisy image-text pairs scraped from the internet. These pairs often include mislabeled data, vague descriptions or biologically incorrect associations, leading to inconsistencies in the resulting embeddings.

The new framework—Constrained Concept Refinement or CCR—addresses the first problem by embedding and optimizing interpretability directly into the model’s architecture. It solves the second by introducing flexibility in concept embeddings, allowing them to adapt to the specific task at hand.

Users can tune the framework to favor interpretability, by placing tighter restrictions on concept embeddings, or accuracy, by allowing concept embeddings to stray a bit more. This flexibility allows a potentially inaccurate concept embedding of “healthy bone” obtained from CLIP to be automatically adjusted and corrected by adapting to the available data. In this way, the CCR approach can enhance both the interpretability and the accuracy of the model.
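The article does not spell out CCR's exact constraint, so the following is only a toy sketch under an assumed form. Each concept embedding may move away from its pretrained starting point, but only within a Euclidean ball of a chosen radius, enforced here by projected gradient descent. A simple quadratic loss stands in for the real classification objective. Every name and number (`refine_concept`, `radius`, the 2-D vectors) is hypothetical and is not the authors' implementation.

```python
import math

def project_to_ball(vec, center, radius):
    """Euclidean projection of `vec` onto the ball of `radius` around `center`."""
    diff = [v - c for v, c in zip(vec, center)]
    dist = math.sqrt(sum(d * d for d in diff))
    if dist <= radius:
        return list(vec)
    scale = radius / dist
    return [c + scale * d for c, d in zip(center, diff)]

def refine_concept(initial, target, radius, lr=0.5, steps=50):
    """Projected gradient descent on the toy loss 0.5 * ||e - target||^2.

    `initial` stands in for a possibly noisy pretrained (e.g. CLIP) embedding,
    `target` for wherever the task data would pull it, and `radius` for the
    interpretability/accuracy knob (all hypothetical).
    """
    e = list(initial)
    for _ in range(steps):
        grad = [ei - ti for ei, ti in zip(e, target)]  # gradient of the toy loss
        e = [ei - lr * g for ei, g in zip(e, grad)]    # unconstrained step
        e = project_to_ball(e, initial, radius)        # stay near the original
    return e

clip_like = [1.0, 0.0]  # hypothetical initial "healthy bone" embedding
data_pull = [0.0, 1.0]  # hypothetical point favored by the task data
strict = refine_concept(clip_like, data_pull, radius=0.2)  # favors interpretability
loose = refine_concept(clip_like, data_pull, radius=10.0)  # favors accuracy
```

With the small radius, the refined embedding ends up exactly 0.2 away from its starting point, so it stays close to what the pretrained model meant. With the large radius, it settles wherever the data pulls it. This mirrors the interpretability-versus-accuracy trade-off described above.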

“What surprised me most was realizing that interpretability doesn’t have to come at the cost of accuracy. In fact, with the right approach, it’s possible to achieve both—clear, explainable decisions and strong performance—in a simple and effective way,” said Fattahi.

CCR outperformed two existing explainable methods (CLIP-IP-OMP and label-free CBM) in prediction accuracy while preserving interpretability when tested on three image classification benchmarks (CIFAR10/100, ImageNet, Places365). Importantly, the new method also reduced runtime tenfold, offering better performance at lower computational cost.

“Although our current experiments focus on image classification, the method’s low implementation cost and ease of tuning suggest strong potential for broader applicability across diverse machine learning domains,” said Geyu Liang, a doctoral graduate of industrial and operations engineering at U-M and lead author of the study.

For instance, AI increasingly helps decide who qualifies for loans, but without explainability, rejected applicants are left in the dark about why. Explainable AI can increase transparency and fairness in finance by ensuring a decision was based on specific factors like income or credit history rather than on biased or unrelated information.

“We’ve only scratched the surface. What excites me most is that our work offers strong evidence that explainability can be brought into modern AI in a surprisingly efficient and low-cost way,” said Fattahi.

Large-scale experiments were run on the U-M Great Lakes Cluster.

This work is supported by the National Science Foundation (DMS-2152776) and the Office of Naval Research (N00014-22-1-2127).

Full citation: “Enhancing performance of explainable AI models with constrained concept refinement,” Geyu Liang, Senne Michielssen, and Salar Fattahi, Proceedings of the 42nd International Conference on Machine Learning (2025). DOI: 10.48550/arXiv.2502.06775